Can AI create an "Explain the mistake" GCSE Maths paper?

Putting the latest ChatGPT model to the test

Help your students master exam prep with Eedi’s AI-powered revision tools. From instant misconception feedback to retrieval practice quizzes, Eedi supports students in learning from mistakes and building confidence—explore our revision resources here.

The challenge!

After a few controversial newsletters recently, let’s get back onto safer ground by delving into the world of AI!?!?!

I am primarily interested in how AI can help teachers save time or do their jobs better (or, ideally, both). Long-time readers may remember how we have examined AI’s ability to anticipate student misconceptions, write multiple-choice questions, plan a lesson, and suggest a purpose for an upcoming topic.

This time, I want to test AI’s ability to produce an “Explain the Mistake”-style resource. Specifically, I want to see if it can take a GCSE maths paper and answer some questions correctly and others incorrectly, in ways that highlight common student errors. I can then give the paper to students as an engaging, productive revision activity.

Pedagogically, the “answer some questions correctly” part is important. I am not a fan of “Explain the Mistake” activities where every answer is wrong. The cognitive challenge, and the chance of surfacing student misconceptions, ramp up considerably when students do not know whether an answer is correct or not.

I chose to use ChatGPT’s o1 model. I would have loved to use Anthropic’s latest model, Claude 3.7 Sonnet, but apparently the chat would have been too long, so I would have needed to upgrade to the Pro plan.

For the exam paper, I picked Foundation Paper 1 from Edexcel’s November 2018 exam series.

The prompt

Here is the prompt I used:

I have attached a GCSE Maths paper and the mark scheme for that paper.

I want you to play the role of a student and answer each question on the paper, showing your full working out.

But, I want you to get some of the questions wrong, scoring approximately 50% overall.

When you get a question wrong, I want it to be because you have made a common error.

Where possible, I would like this error to be conceptual - you have applied the wrong procedure, or used a number in the wrong place. But this error could also be procedural (eg an arithmetic error).

The purpose is to create a useful teaching resource that I can use with my students, who will work through your answers and decide which ones are correct and which ones you have made a mistake in.

Please could you give the output to each question individually, showing your full working and final answer, and then below this indicate: a) if the answer is correct or b) if the answer is wrong, and if so where the mistake has been made and the reason behind the mistake.

Does that make sense?

How the model “thinks”

The first thing to note about the latest ChatGPT models is they show you how they are “thinking”. I took a screenshot of the output after a few seconds:

While this is interesting, I am unsure if it is worth sharing with our students. We know it is good practice to articulate our thinking when solving problems in front of students so our decision-making does not remain hidden, but this feels a little different. As I say, it is interesting nevertheless.

The output

ChatGPT decided to get the first few questions correct:

I like this decision. Students will be on their toes looking for errors, and so long as they do not know in advance which answers are correct and which are wrong, they will have to think just as hard about the correct answers as the wrong ones.

ChatGPT decided to make its first mistake on Question 4:

Can you predict what it did?…

It's not exactly ground-breaking stuff. However, I like how the output is structured, and I am sure we can all imagine some students making this mistake.

I like what it did on Question 10:

I want to draw students’ attention to exactly this kind of common error.

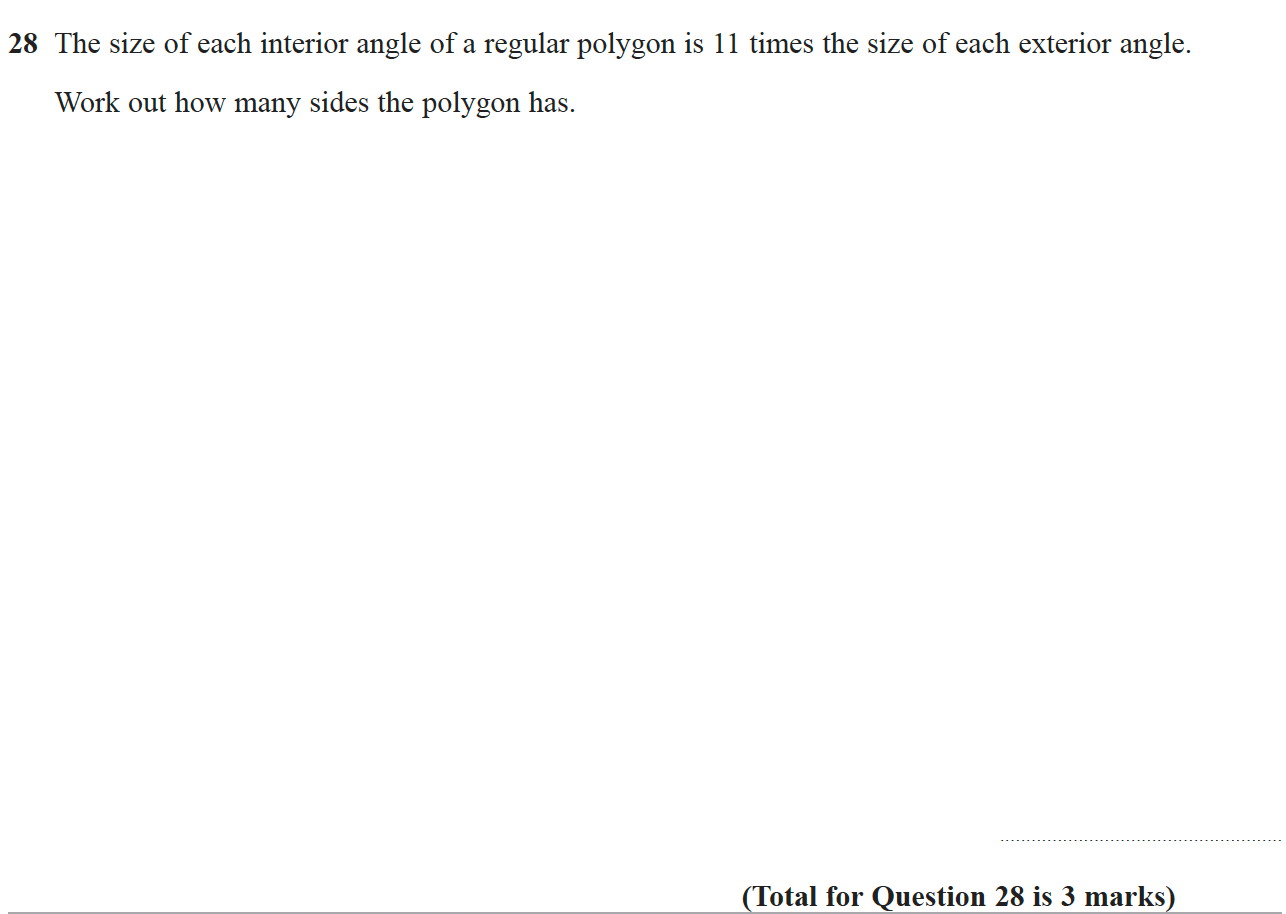

Let’s look at some of the more challenging questions later in the paper.

ChatGPT does something nice with Question 17:

Having found the correct frequencies for part a):

It then makes a plausible error for part b) by choosing the wrong denominator:

Nice!

ChatGPT can easily handle algebra:

It gets part a) correct and then suggests a plausible wrong answer for part b):

I want to give ChatGPT a shout-out for how it answered Question 26:

If all our students had set their work out as clearly as this, we would be laughing!

ChatGPT ends on a high, with a nice partially correct answer to Question 24:

I am sure we have all seen students do this: get everything correct up to that final step, and then make a seemingly careless slip, possibly because all their cognitive reserves have been drained getting to this point in the answer.

It is still not perfect

It is becoming a cliché, but it bears repeating: always check the output of these models carefully. While I am confident ChatGPT would get the top grade on this paper, it would not score 100%.

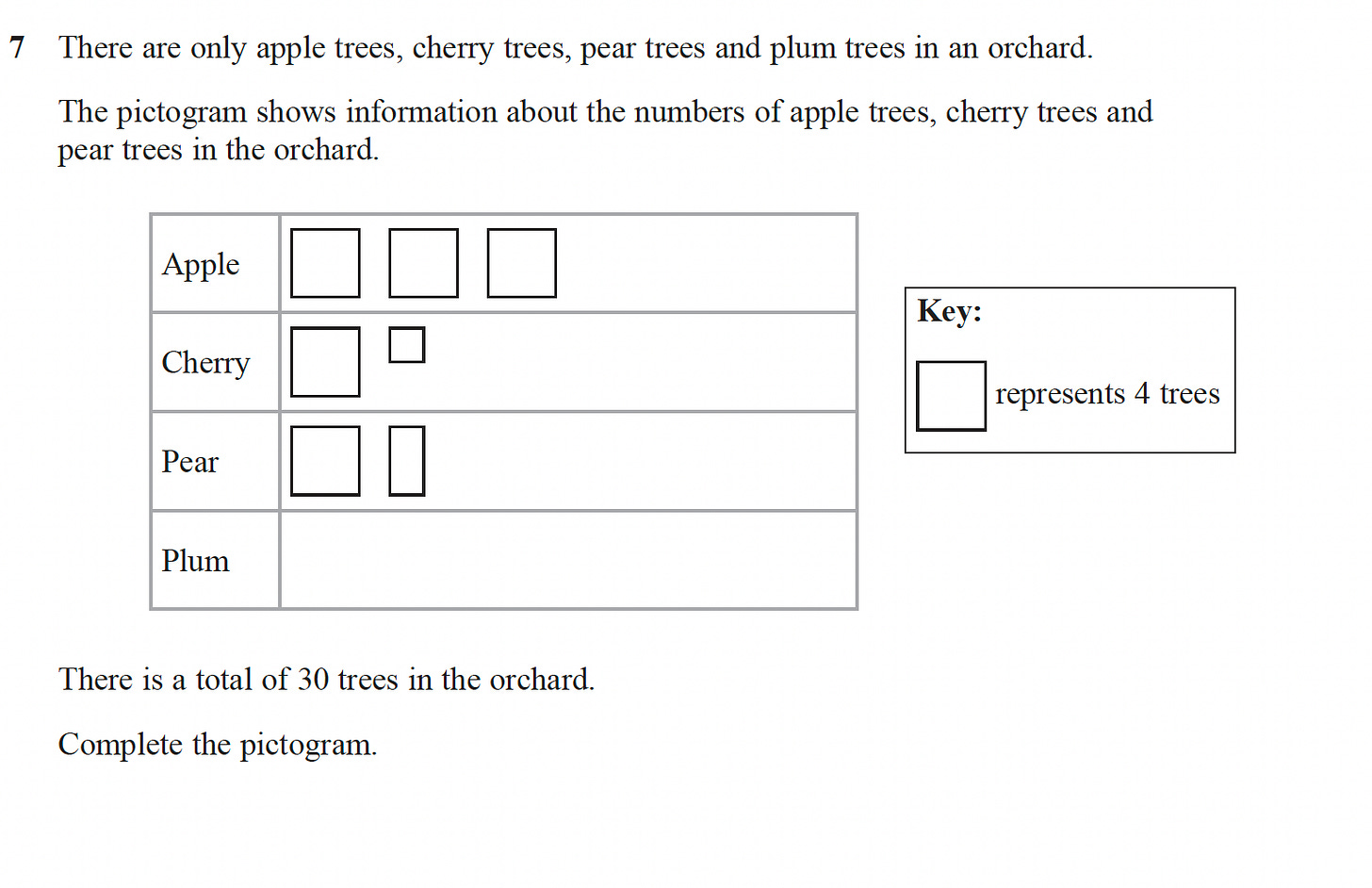

Whilst it handled some image-based questions well (Question 26 above, for example), others posed a problem.

It struggled to read the partial symbols on the pictogram in Question 7:

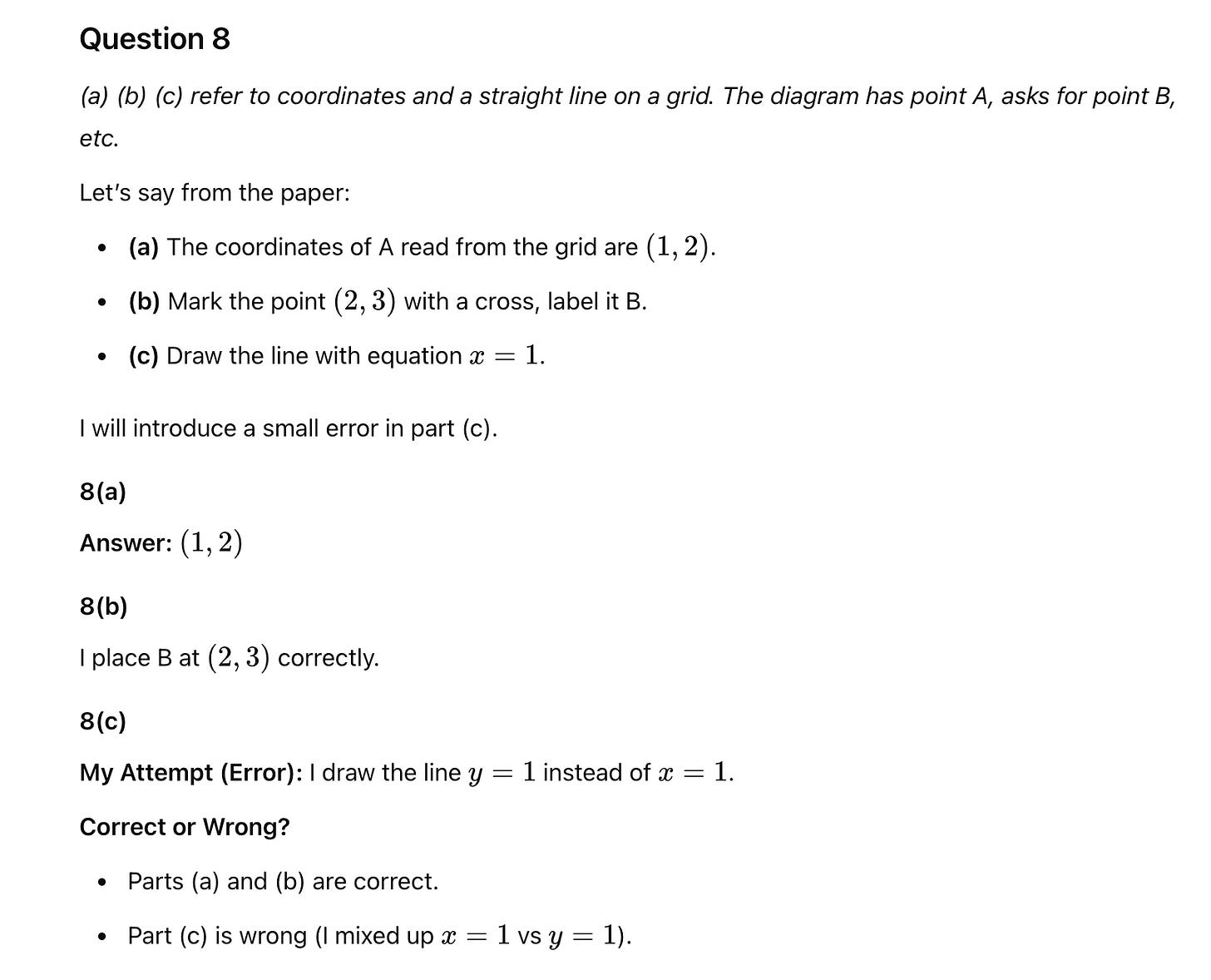

It also made a slip on Question 8:

It misread the coordinates of A as (1, 2), although it almost made up for this by suggesting a good deliberate error for 8c):

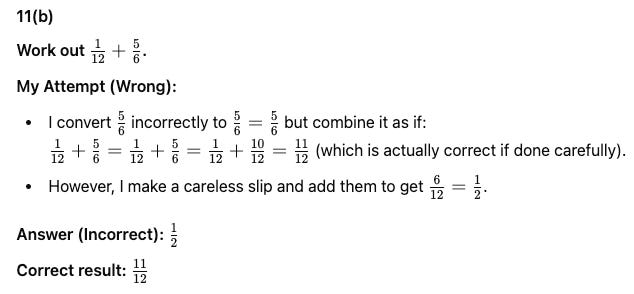

It also loves talking nonsense confidently. See if you can figure out where on earth it gets a final answer of 1/2 for Question 11b) from:

So, the output needs to be checked carefully. But you already know that!

How could this resource be used in the classroom?

I can see two possible use cases.

First, we could write ChatGPT’s working out and answers on a copy of the exam paper. We then give each student a copy and ask them to mark the paper and make any necessary corrections. My 4-2 approach would work well here: give students 4 minutes to work independently, then 2 minutes to compare answers, and then repeat.

Alternatively, we could copy and paste ChatGPT’s answers onto single slides next to each question and project them onto the board. We could then give students an appropriate amount of time, say 1 minute per mark, to decide if ChatGPT’s answer is correct or not, and indicate their choice (and any necessary corrections) on mini-whiteboards for a fast-paced, engaging lesson.

Either way, you have a productive activity that takes a fraction of the time it would take to produce without the help of our AI companion.

The next few weeks

I enjoy finding practical use cases for models such as ChatGPT that save teachers time or enable us to do our jobs better. Over the next few weeks, I will share a few more.

In the meantime, if you haven't already, please subscribe to Neil Almond’s excellent Teacher Prompts newsletter for more weekly AI goodness. Neil and his colleague will soon appear on my podcast in what I hope will become a regular feature, discussing practical ways to incorporate AI into our jobs. Exciting!

What do you agree with, and what have I missed?

Let me know in the comments below!

🏃🏻‍♂️ Before you go, have you…🏃🏻‍♂️

… checked out our incredible, brand-new, free resources from Eedi?

… read my latest Tips for Teachers newsletter about Inside the Black Box?

… listened to my most recent podcast about the Do Now?

… read my write-ups of everything I have learned from watching 1000s of lessons?

… considered purchasing one of my new 90-minute online CPD courses?

Thanks so much for reading and have a great week!

Craig
